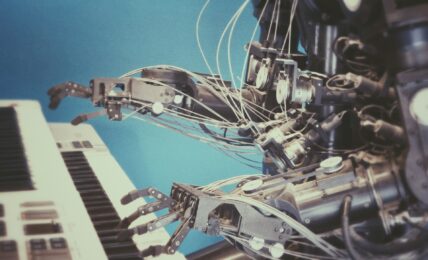

Dystopian Futures: Can Robots Be Moral?

Optimists talk of a future where instead of having human beings facing each other across a battlefield, belligerents in a battle will simply deploy their robotic troops to destroy one another.

The word “robot” has had many definitions. Perhaps the most recent is “a machine that functions in place of a living agent.” The “living agent” in this definition is rather contradictory, because at the dawn of robotics it was precisely how different robots were from living agents that made their development such an attractive prospect. Robots are supposed not only to work 24/7 but also to multitask. The DARPA Robotics Challenge, won by KAIST’s Hubo in 2015, showed how adept robots could be, completing multiple tasks while maneuvering through hazardous man-made environments. Yet behind Hubo’s feats stood an army of engineers without whose programming Hubo would have been little more than a fancy mannequin. So when did this new definition of robots emerge?

Zombie apocalypses, nuclear winters and disease outbreaks that wipe out most of the population aside, one of the most common themes in films and books is the robot that suddenly goes rogue after weaning itself off human input. Unease at a future in which robots acquire autonomy is nothing new. Long before the word “robot” first appeared in Karel Čapek’s play R.U.R. (Rossum’s Universal Robots), stories such as Mary Shelley’s Frankenstein[3] depicted spurned “artificial human-like beings” turning their wrath on their creators. Current robots are years, perhaps decades, away from resembling the monster Dr. Victor Frankenstein created in his lab. That has done little to dispel anxiety about a future in which they could operate without us. The idea has captured humanity’s collective imagination, inspiring films such as I, Robot and the Terminator series. Unlike Frankenstein, where the man-made horror was a single being, both these films cast as villain an intelligence that, though made by humans, surpasses them and turns the robots under its control against its creators. That villain is a caricature of a future artificial intelligence.

According to Techopedia’s definition, Artificial Intelligence (AI) is “a science that emphasizes the creation of machines that work, behave and react like human beings.” But what exactly does it mean “to be human”? Is it the ability to make choices independently? As AI advances, a future in which robots can make genuine choices is no longer the stuff of science fiction. What is a robot that can make choices? What morality would inform the thought process that leads such a robot to choose one option over another? How different from, or similar to, the morals of its creators would that morality be?

Suppose that in the very near future we have developed fully autonomous vehicles capable of making decisions, and one of them is speeding down a busy highway. Suddenly an old man walks onto the road, and the only way to avoid hitting him is to swerve into the other lane, potentially causing a major pile-up that would kill the occupants of other vehicles. What sort of “moral” software would such a vehicle run on? Does this futuristic “trolley problem” reveal a dead hand that the development of any such morality cannot escape?

Currently, the only morality we know of is our own, and it is torn between rationalizing the sacrifice of one to save five and the other way around. There is no reason, however, why other forms of morality should not exist. This raises the question: how can we human beings, strapped into the straitjacket of moral relativism, design a machine that is universally moral?

The lesson one is supposed to learn on first encountering the original trolley problem is just how important “justification” is. Is such language possible, or even moral, with regard to robots?

After IBM’s Deep Blue beat Garry Kasparov, its developers said they had set the machine to play itself, learning at every turn in a positive feedback loop that culminated in the famous 1997 victory. Is something similar possible with what scientists now call artificial morality? Kasparov has said that during the match he was acutely aware of the “deep intelligence and creativity” in some of Deep Blue’s moves. At other times he could not fathom a move, only for it to make sense after the game was over. Asking whether such an “ends justify the means” model is viable for artificial morality leads down a very dark and scary rabbit hole.

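Perhaps Isaac Asimov’s Three Laws of Robotics give a hint of just how tangled this is. First introduced by the author in 1942, the laws were meant to provide the basic framework from which a concise robot morality could be constructed:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.
2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.
3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.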

Until now, even where robots could make limited choices, they relied on human judgement to determine the context, defined here as which variables to ignore in a particular instance and which ones matter. Therein lies the roach in the pudding. How does a robot’s creator program it to “know” which variables to ignore and which to act on? ProPublica’s investigation into Northpointe’s COMPAS, a tool used by judges in sentencing and bail decisions, revealed an apparently “fair” program making decisions that were not exactly fair. Its outputs rested on variables chosen within a flawed system that wrongly considered some demographics more prone to committing crimes than others. Are we making AI in our own “image and likeness”? Is that “moral”?

Currently AI, like robotics, is divided by functionality into two major groups: general AI and narrow AI. Just as in robotics, narrow AI, such as IBM’s Deep Blue and Google’s AlphaGo, is far more developed than general AI. As these AIs find their way into autonomous robots capable of making choices, some interesting questions emerge. Since Sega unveiled its EMA (Eternal Maiden Actualization) robot in Japan in 2008, there has been a proliferation of robots designed for love. What sort of “narrow” morality is expected of them? What about service robots? What of robots that care for the elderly and young children, the two vulnerable populations with whom robots appear to get along best?

Optimists talk of a future where, instead of human beings facing each other across a battlefield, belligerents will simply deploy their robotic troops to destroy one another. When news broke that KAIST had entered a partnership with Hanwha to develop such “killer robots,” some members of the international academic community threatened to boycott KAIST unless it stopped. Many assurances followed, liberally sprinkled with words like “ethical,” “moral” and “responsible.” The response to them was simple: “What if it all goes wrong?”

If the movies are anything to go by, such a situation would pose as much of a threat to our future as climate change and the prospect of nuclear war. International treaties already exist to curb the spread of nuclear weapons, such as the Treaty on the Non-Proliferation of Nuclear Weapons and the Comprehensive Nuclear-Test-Ban Treaty. Attempts are also being made, at the UN level, to limit greenhouse gas emissions. In September 2018, it was reported that several of the world’s most militarily advanced powers were blocking talks that could have led to a resolution fully banning the development of autonomous killer robots. What sort of robot morality will emerge from such a climate?

Moreover, if rogue AI is as potent as our collective imagination insists, why is the development of so dreaded a technology left largely to profit-driven companies rather than to governments? Is business jargon such as “efficiency” applicable to robot morality? And why is “robot morality,” or “robot ethics,” not advancing at the same pace as the capabilities of robots?

As we near the inevitable point where the machines we create will truly be made “in our own image and likeness,” one can’t shake the feeling of déjà vu. A hundred years ago, a similar debate raged around a breakthrough technology. If the outcome of that debate is anything to go by, we need to be very cautious.

Once we let this particular genie out of the bottle, there is no putting it back in.